Tutorial: Vaccine Efficacy¶

This will be your first fully worked-out inference problem for the course. That being the case, we are going to avoid introducing a bunch of extraneous background about particular astrophysical models or data at the same time by instead using an example from medicine.

This is likely also the last time in the course that you will be evaluating posterior distributions on a grid, since in general this is horribly inefficient. Indeed, getting an appreciation for that fact is part of the reason we're going through it here. We will also use this tutorial as an opportunity to work through the process of finding maximum-likelihood estimates and confidence regions, since their calculation on a grid is mechanically quite similar to the Bayesian results. We will also demonstrate some of the alternative (non-grid) methods you'll work with later, although you will not have any tasks associated with them in this notebook.

Specifically, you will

- define a generative model for the scenario described

- apply Bayes' Law, evaluating the posterior over a grid in parameter space

- qualitatively evaluate the goodness of fit

- compute credible intervals and regions

- compute analogous results using the maximum-likelihood approach

# !pip install bcgs lmc incredible

from os import getcwd

from yaml import safe_load

import numpy as np

import scipy.stats as st

import matplotlib.pyplot as plt

%matplotlib inline

import bcgs

import lmc

import incredible as cr

thisTutorial = 'vaccine'

if getcwd() == '/content':

# assume we are in Colab, and the user's data directory is linked to their drive/Physics267_data

from google.colab import drive

drive.mount('/content/drive')

datapath = '/content/drive/MyDrive/Physics267_data/' + thisTutorial + '/'

else:

# assume we are running locally somewhere and have the data under ./data/

datapath = 'data/'

Background¶

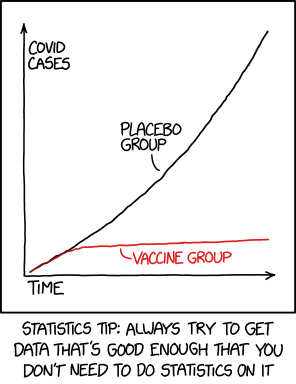

Among other things, the years 2020-2021 are notable for the number of people taking an close interest in the process of vaccine development and approval who ordinarily wouldn't. It came as a great relief to many when, in the autumn of 2020, pharmaceutical company Pfizer announced that they had developed a vaccine for Covid-19 that was safe and 95% effective.

The level of detail in this New York Times article is representative of reporting on both the Pfizer announcement and the later Moderna announcement. (The information publically released by AstraZeneca about their trials at the time, at least that I could find, was not sufficient to perform a similar analysis to the one we do below.)

Pfizer and BioNTech’s trial included nearly 44,000 volunteers, half of whom received the vaccine. The other half received a placebo shot of salt water. Then the researchers waited to see how many in each group developed Covid-19.

The companies said that out of 170 cases of Covid-19, 162 were in the placebo group, and eight were in the vaccine group.

Via xkcd.com. Thanks for the tip, but we're going to anyway. |

For those of a certain mindset, the reporting immediately raised three questions:

- What exactly does effectiveness mean in this context?

- What is the uncertainty on the 95%? (You might intuitively suspect it's large, given the small fraction of participants who tested positive at all.)

- Is it possible to answer these questions based only on the mass-media reports (i.e. without waiting for more official documentation)?

As to the first question above, we will use the following generative model, including a definition of effectiveness, which I claim is logical and which ends up yielding a best-fit effectiveness of 95%:

- Trial participants are split into control and treatment groups with sizes $N_\mathrm{c,tot}$ and $N_\mathrm{t,tot}$. We don't know precisely what these values are, but will probably be ok assuming that they are both 22,000.

- Let $P_\mathrm{exp}$ be the average probability that one of the control participants is exposed to Covid-19 and becomes infected and is tested for Covid-19 (surveillance testing not being in place at this point in time) and tests positive. $N_\mathrm{c,pos}$ of them do so.

- We will assume that the control and treatment groups are similar enough that this average probability would also apply to members of the treatment group if they had not been vaccinated. That is, it is the probability that they are sufficiently exposed to Covid-19 that they would have become ill and tested positive had they not been vaccinated. There is a corresponding number of treatment-arm participants, $N_\mathrm{t,exp}$, who have this level of exposure but do not necessarily become ill, get tested and/or test positive, due to vaccination.

- For the members of the treatment with this level of exposure, the probability that they do not go on to become ill and test positive is $P_\mathrm{vac}$. This is the "effectiveness", essentially the fractional reduction in the number of particpants who test positive, comparing the control and treatment groups. Conversely, these highly exposued treatment-group participants have a probability $1-P_\mathrm{vac}$ of testing positive despite vaccination. The number that do is $N_\mathrm{t,pos}$.

(To be clear, I am not claiming that either the company or the FDA plugged this model through a Bayesian analysis like we will. Almost certainly they didn't, although I suspect the method they did use is an approximation to what we will do.)

Answering the second and third questions is, of course, the point of the remainder of this notebook.

Specify the model and priors¶

The bullet-point model above is a bit convoluted. Naturally, your first job is to map out this model as a PGM and fully specify it in generative form. To help, here are the quantities mentioned above that are explicitly given in the article:

Nc_tot: total number in control arm

Nt_tot: total number in treatment arm

Nc_pos: number of positive cases in control arm

Nt_pos: number of positive cases in treatment arm

As usual, however, you will be analyzing your own, unique (albeit fictional) data. Let's read that in.

data = safe_load(open(datapath+'data.yaml', 'r').read())

data

In addition to these quantities, we will have to account for

Pexp: probability of exposure/vulnerability resulting in positivity WITHOUT treatment

Nt_exp: number in the treatment branch so exposed/vulnerable

Pvac: probability of one of them NOT testing positive

which are not given. These are either parameters of interest or things we need to marginalize over, depending on your point of view. For the sake of organization, let's define the following canonical order for these parameters:

param_names = ['Pexp', 'Nt_exp', 'Pvac']

Finally, you will need to define and justify priors for $P_\mathrm{exp}$ and $P_\mathrm{vac}$. As always, the overriding concern when chosing a prior is that it be defensible. However, in this case, please adopt priors from the Beta family of distributions, which is defined over the real interval [0,1] and is reasonably flexible. (This restriction will make it simpler for us to check your work, and allow us to demonstrate the use of conjugate Gibbs sampling with this problem, far below.)

Note: In class we will discuss and agree on a common set of priors that everyone should use.

Space for PGM and generative model

Define the hyperparameter values ($\alpha$ and $\beta$ for the two Beta distribution priors) here:

# hyperparams = {'Pexp_alpha': ..., 'Pexp_beta':..., 'Pvac_alpha':..., 'Pvac_beta': ...}

# YOUR CODE HERE

raise NotImplementedError()

We'll visualize the priors below:

print('Beta distribution prior hyperparameters for Pexp:', hyperparams['Pexp_alpha'], hyperparams['Pexp_beta'])

print('Beta distribution prior hyperparameters for Pvac:', hyperparams['Pvac_alpha'], hyperparams['Pvac_beta'])

plt.rcParams['figure.figsize'] = (10.0, 3.0)

fig, ax = plt.subplots(1,2)

xx = np.linspace(0.0, 1.0, 1000)

ax[0].plot(xx, st.beta.pdf(xx, hyperparams['Pexp_alpha'], hyperparams['Pexp_beta']));

ax[0].set_xlabel(r'$P_\mathrm{exp}$'); ax[0].set_ylabel(r'$p(P_\mathrm{exp})$');

ax[1].plot(xx, st.beta.pdf(xx, hyperparams['Pvac_alpha'], hyperparams['Pvac_beta']));

ax[1].set_xlabel(r'$P_\mathrm{vac}$'); ax[1].set_ylabel(r'$p(P_\mathrm{vac})$');

Solution on a grid¶

Before moving on, it will be useful to have a simple, common-sense guess at the parameter values, based on the reported information. Go ahead and fill in the dictionary below, and eventually you'll find out if your simple estimates were close. (At least for my definition of "common sense", the guessed value of $P_\mathrm{vac}$ based on the real data comes out to about 95.1%.) Do remember that $N_\mathrm{t,exp}$ must be an integer.

# guess = {'Pexp':..., 'Nt_exp':..., 'Pvac':...}

# YOUR CODE HERE

raise NotImplementedError()

guess

Implement and benchmark the posterior calculation¶

It's time to write functions to evaluate the prior, sampling and posterior distributions.

Normally, we try to evaluate the log of these distributions. This is because floating-point underflows can be an issue, especially when the sampling distribution is a product with many terms. (That won't be the case in this problem, but happens naturally when the data consist of many measurements.) Another benefit is that we don't have to worry about normalizing coefficients that don't depend on model parameters, unless we wanted to compute the absolute value of the evidence for some reason. Since we'll be evaluating over a grid in this notebook, we could always normalize the posterior later by dividing it by its numerical integral.

If any of these functions should return zero probability, you can and should have them return a log-probability of $-\infty$. This includes cases where parameters are somehow out of bounds or would cause numerical errors, e.g. if a negative value of $P_\mathrm{vac}$ is passed. Our solution will be on a grid, so it would be easy to simply not include any pathological values in the range to be explored. However, we will soon move to using algorithms that might ask for an evaluation at any value, in principle, so it's a good habit to watch out for cases that might crash your code and handle them proactively. scipy.stats functions usually deal with this kind of checking automatically, which can simplify things.

Anyway, complete the following function for evaluating the log-prior, which should work for any choice of the hyperparameters:

def log_prior(Pexp, Nt_exp, Pvac, Pexp_alpha, Pexp_beta, Pvac_alpha, Pvac_beta):

# YOUR CODE HERE

raise NotImplementedError()

As always, we should make sure it returns some finite value instead of crashing when fed a reasonable parameter dictionary. We can just use guess for this type of check.

log_prior(**guess, **hyperparams)

Next, implement and test the log-likelihood/sampling distribution. Please note and do not change the line below where we round the value of Nt_exp. Notionally it's of course an error for this parameter to be non-integer, but the rounding will let us be a little sloppy with, e.g., defining evenly spaced parameter values over which to test the posterior distribution.

def log_likelihood(data, Pexp, Nt_exp, Pvac, **unused_kwargs):

# unused_kwargs is there so we can pass hyperparameters without crashing,

# not that we would/could use them in the likelihood

Nt_exp = np.round(Nt_exp) # just in case we are passed a non-integer

# YOUR CODE HERE

raise NotImplementedError()

log_likelihood(data, **guess)

Finally, the log-posterior. As will often be the case, we will just return the sum of the log-prior and log-likelihood, i.e. we will neglect the normalizing constant (evidence), which is constant with repect to the model parameters.

The construction below is a good one to be in the habit of using. Usually, the likelihood is much more expensive to compute than the prior, and might even crash if passed prior-incompatible parameter values. So it's worth the extra check of a non-zero prior probability before attempting to evaluate the likelihood.

def log_posterior(data, **allparams):

lnp = log_prior(**allparams)

if np.isfinite(lnp):

lnp += log_likelihood(data, **allparams)

return lnp

Let's do some basic checks that it works as intended. This will also check that your guess has a non-zero posterior probability; if it doesn't there's either a bug or your guess should be revisited.

print(log_posterior(data, **guess, **hyperparams))

assert log_posterior(data, **guess, **hyperparams) == log_prior(**guess, **hyperparams) + log_likelihood(data, **guess)

assert np.isfinite(log_posterior(data, **guess, **hyperparams))

We can also do a check of the key functions against our solutions, for arbitrary parameter/hyperparameter values (since we don't know whether your functions are normalized, this is done in terms of differences in each function):

post_test = safe_load(open(datapath+'post_test.yaml', 'r').read())

assert np.isclose(log_prior(3e-4, 100, 0.9, 2.0, 10.0, 10.0, 2.0)-log_prior(6e-4, 200, 0.99, 2.0, 5.0, 5.0, 2.0), post_test['prior'])

assert np.isclose(log_likelihood(data, 3e-4, 100, 0.9)-log_likelihood(data, 6e-4, 200, 0.99), post_test['like'])

Assuming all is well so far, let's get an idea of how fast the posterior calculation is:

%%time

for i in range(1000):

log_posterior(data, **guess, **hyperparams)

My particular implementation, on my aging laptop, seems to be taking about 0.8ms per evaluation. (It's easily possible to speed this up by being clever about Python optimization, but never mind.)

Let's call this a round 1ms per evaluation. Imagining that we'd like a grid running over 100 values for each parameter ($10^6$ grid points in total) to really map out the posterior well, we'll be waiting more than 15 minutes. That assumes that we know what part of parameter space the posterior will be non-tiny in, but in any case 15 minutes is not a big deal in the scheme of things.

On the other hand, there are plenty of data sets where evaluating the likelihood involves serious computation, requiring seconds or (but hopefully not!) minutes per evaluation. As you can imagine, we'll be looking for less brute-force approaches than a grid evaluation in those cases. Even when the likelihood is fast, the exponential scaling of the grid size with the number of parameters (i.e. it's dimensionality) rapidly gets out of hand. For example, here's how the time to evaluate our 1ms posterior over a 100-value-per-parameter grid grows with the number of parameters:

for npar in [3, 6, 9]:

t = 1e-3*100.**npar

print("For", npar, "parameters, ", t, "seconds =", t/60, "minutes =", t/3600, "hours =", t/(3600*24*365.25), "years")

which, to be clear, is longer than any of our notebooks should take to run.

Evaluate on a course grid¶

Bearing all that in mind, let's go ahead and do a quick run over a grid of size 10 in each parameter. Though we have a guess at what values of each parameter will be favored by the data, we still don't know how far in any direction in parameter space we need to go to encompass most of the posterior probability. A quick exploraration will help that, and then we can run a finer grid afterwards.

Fill in some minimum and maximum values for each parameter below, but please don't change the value of size.

size = 10

# Pexp_values = np.linspace(..., ..., size)

# Nt_exp_values = np.linspace(..., ..., size)

# Pvac_values = np.linspace(..., ..., size)

# YOUR CODE HERE

raise NotImplementedError()

Next we'll evaluate the posterior over all combinations of the parameter values above. The function below is probably not the most efficient way of coding this (and certainly not the shortest way), but is at least explicit.

def run_grid(Pexp_values, Nt_exp_values, Pvac_values):

res = np.empty((len(Pexp_values), len(Nt_exp_values), len(Pvac_values)))

for i,Pexp in enumerate(Pexp_values):

for j,Nt_exp in enumerate(Nt_exp_values):

for k,Pvac in enumerate(Pvac_values):

res[i,j,k] = log_posterior(data, Pexp=Pexp, Nt_exp=Nt_exp, Pvac=Pvac, **hyperparams)

return res

Based on the numbers above, this course grid should only take about 1s to run.

%%time

lnpost_grid = run_grid(Pexp_values, Nt_exp_values, Pvac_values)

Next, we want to see how well the grid bounds you chose enclose the posterior distribution. If the posterior is not tiny at the edge of the grid, and that edge doesn't correspond to a fundamental limit like $P_\mathrm{vac}=1.0$, you'll want to go back and change the grid extent. We'll also get a sense of how poorly this course grid captures the shape of the posterior.

The simplest way to go about this is to compute the marginalized 1D posteriors of each parameter on the grid, and look at them. Since we'll want to do this again with a finer grid, it's worth writing a quick function to do so. The particular format for the return value described below will allow us to take advantage of existing code to compute credible intervals from the results.

# This function should return a list, with one entry for each of the parameters (in canonical order).

# Each entry should be a dictionary with

# 'x': the array of grid values for that parameter

# 'density': a corresponding array of the 1D marginalized posterior PDF (not log-PDF) for that parameter. It does not need to be normalized.

def get_marg1d(lnpost_grid, Pexp_values, Nt_exp_values, Pvac_values):

# return [{'x':Pexp_values, 'density':...}, ...]

# YOUR CODE HERE

raise NotImplementedError()

Let's run it

marg1d = get_marg1d(lnpost_grid, Pexp_values, Nt_exp_values, Pvac_values)

... and plot each 1D distribution:

def plot_marg1d(marg1d, symb='o'):

plt.rcParams['figure.figsize'] = (20.0, 4.0)

fig, ax = plt.subplots(1,len(param_names))

for i,name in enumerate(param_names):

ax[i].plot(marg1d[i]['x'], marg1d[i]['density'], symb);

ax[i].set_xlabel(name);

ax[i].set_ylabel('p('+name+'|data)');

plot_marg1d(marg1d);

Hopefully the plots above give you an idea of where in parameter space the posterior is large, and where it drops to about zero, at least after marginalization. If not, this is a good point to go back and adjust the size of the grid. With only 10 points in each of these, it's unlikely that we're confident in the shape of the distribution being mapped out, which is why we'll run a finer grid now that we have a better idea of what its extent should be.

Before doing so, let's continue through the exercise of computing the 1D credible intervals and 2D credible regions from the course grid. It will probably not look pretty, but we can re-use these functions with the finer grid in a minute. The (complete) function below plugs your marginalized distributions into functions you've seen in the Credible Regions tutorial.

def plot_ci1d(marg1d):

plt.rcParams['figure.figsize'] = (20.0, 4.0)

fig, ax = plt.subplots(1,len(param_names))

CIs = []

for i,name in enumerate(param_names):

CIs.append( cr.whist_ci(marg1d[i], plot=ax[i]) )

ax[i].set_xlabel(name);

ax[i].set_ylabel('p('+name+'|data)');

return CIs

plot_ci1d(marg1d);

Chances are you can see the limitations of the course grid pretty clearly here.

Now we'll do something similar with the 2D marginalized posterior distributions, corresponding to each combination of 2 of the 3 parameters. As before, complete the function below by computing the marginalized distributions on a grid.

Note: The reversal of 'x', 'y' and 'names', compared with our usual parameter order, is correct for what I thought was the simplest possible way to perform the marginalization. Please compare the resulting 2D plots with the 1D plots above to make sure that the axes have not gotten confused!

# Analogous to above, this function returns a list of the three 2D marginalized posteriors.

# For concreteness, put these in the order: Pexp-Nt_exp, Pexp-Pvac, Nt_exp-Pvac.

# Note that now each dictionary's 'x' and 'y' will be a 1D array of parameter values, while its 'z' will be a 2D array of posterior probabilities.

# For convenience/clarity, also store the names of the two parameters corresponding to each distribution as shown.

def get_marg2d(lnpost_grid, Pexp_values, Nt_exp_values, Pvac_values):

# return [{'y':Pexp_values, 'x':Nt_exp_values, 'z':..., 'names':[param_names[0], param_names[1]]}, ...]

# YOUR CODE HERE

raise NotImplementedError()

marg2d = get_marg2d(lnpost_grid, Pexp_values, Nt_exp_values, Pvac_values)

Let's see what the credible regions based on this grid look like:

def plot_ci2d(marg2d):

plt.rcParams['figure.figsize'] = (20.0, 4.0)

fig, ax = plt.subplots(1,len(marg2d))

for i,m in enumerate(marg2d):

cr.whist2d_ci(m, plot=ax[i]);

ax[i].set_xlabel(m['names'][0]);

ax[i].set_ylabel(m['names'][1]);

plot_ci2d(marg2d);

Again, you can probably see the impact of the grid's courseness on the results. We definitely wouldn't want to use this initial grid evaluation to draw conclusions if we could help it, but we should now have enough information to define a finer grid, below.

Evaluate on a finer grid¶

Now that you know how to work with inference on a grid, let's invest a few cycles in evaluating the posterior over a finer grid. Define the extent and size of the grid below based on your results above. The goal is for the grid to encompass the region in parameter space where the posterior is not very close to zero, so that we are not missing a significant amount of the distribution, while also being dense enough to capture its shape, so that our integrations (marginalizations) will be accurate. Something like size of 30-40 is probably plenty for our purposes, though fewer than we'd want for a publishable result. Come back and experiment after seeing the results below if you have any doubts.

# size = ...

# Pexp_values = np.linspace(..., ..., size)

# Nt_exp_values = np.linspace(..., ..., size)

# Pvac_values = np.linspace(..., ..., size)

# YOUR CODE HERE

raise NotImplementedError()

%%time

lnpost_grid = run_grid(Pexp_values, Nt_exp_values, Pvac_values)

As before, let's compute and plot the 1D marginalized posteriors ...

marg1d = get_marg1d(lnpost_grid, Pexp_values, Nt_exp_values, Pvac_values)

plot_marg1d(marg1d);

... and their credible intervals ...

CIs = plot_ci1d(marg1d);

CIs

... as well as the 2D marginalized credible regions ...

marg2d = get_marg2d(lnpost_grid, Pexp_values, Nt_exp_values, Pvac_values)

plot_ci2d(marg2d);

In my solution (using size=30) the impact of the grid spacing on the 1D CIs is still pretty clear, although the 1D PDFs themselves are now sampled well enough that you can imagine interpolating them to do a better job of determining the CIs. In practice, we would instead simply use one of the (faster) non-grid methods outlined at the end of the notebook, however.

In any case, don't worry about making these results look absolutely perfect. They should look a good deal better than the course grid did, but perfection from an on-the-grid analysis is not worth waiting for when there are better methods available. Instead, we turn to the always-necessary question of whether the model provides an acceptable description of the data.

Note: If you are looking for confirmation that your marginalizations and CIs are correct, jump forward to the Gibbs sampling solution (which is given and in which we are extremely confident), and then check out the comparison at the beginning of the final section.

Questions to ponder/experiment with: the influence of priors¶

Comparing your 1D marginalized posteriors with the corresponding priors, for which parameter(s) do you thin the choice of prior might have been at all significant?

How much does the CI for $p_\mathrm{vac}$ change for different, plausibly "uninformative" choices of prior? How informative would the prior need to be to change the posterior at a level that actually affects our conclusions, compared with a uniform prior?

Space to ponder

Goodness of fit¶

Given that the data and model are relatively simple in this case - it would be surprising if the model couldn't fit the data for some values of the parameters - let's keep the goodness of fit check simple. Specifically, we'll just compare the given values of $N_\mathrm{c,pos}$ and $N_\mathrm{t,pos}$ to predictions from the mode of the posterior distribution. Typically we would want our predictions to also reflect uncertainty in the parameter values, and would therefore marginalize over the posterior; that will be much more straightforward when we start using monte carlo methods later on.

First we find the mode, which is the set of parameter values corresponding to the maximum of the 3D posterior grid. The code to do so is not the most interesting or educational, so it's given.

j = np.unravel_index(lnpost_grid.argmax(), lnpost_grid.shape)

mode = {'Pexp':Pexp_values[j[0]], 'Nt_exp':np.round(Nt_exp_values[j[1]]), 'Pvac':Pvac_values[j[2]]}

mode

Next, define ranges of values for $N_\mathrm{c,pos}$ and $N_\mathrm{t,pos}$, and evaluate the PMF for each of them, given the modal parameter values above. Make sure the ranges are wide enough to encompass most of the probability (see the plots made in the following cell).

# Nc_pos_values = np.arange(...)

# Nc_pos_pppmf = ... # P(Nc_pos|modal Pexp, Nt_exp, Pvac)

# Nt_pos_values = np.arange(...)

# Nt_pos_pppmf = ... # P(Nt_pos|modal Pexp, Nt_exp, Pvac)

# YOUR CODE HERE

raise NotImplementedError()

For this comparison to be meaningful, we need to be confident that we are looking at essentially the entire PMF in this case, so the following cell checks that the arrays you filled in above are close to normalized.

assert np.isclose(Nc_pos_pppmf.sum(), 1.0, atol=1e-2)

assert np.isclose(Nt_pos_pppmf.sum(), 1.0, atol=1e-2)

This will plot the posterior-predicted PMFs you just computed to the measured values (vertical lines).

plt.rcParams['figure.figsize'] = (10.0, 3.0)

fig, ax = plt.subplots(1,2)

ax[0].plot(Nc_pos_values, Nc_pos_pppmf, '.');

ax[0].set_xlabel('Nc_pos\''); ax[0].set_ylabel('P(Nc_pos\'|mode)'); ax[0].axvline(data['Nc_pos'], color='C1');

ax[1].plot(Nt_pos_values, Nt_pos_pppmf, '.');

ax[1].set_xlabel('Nt_pos\''); ax[1].set_ylabel('P(Nt_pos\'|mode)'); ax[1].axvline(data['Nt_pos'], color='C1');

You should be seeing relatively high probabilities at the measured values. If not, this would be an indication that the model is not a good choice, or that something is wrong in the analysis (like the grid containing only parameter values with small posterior probabilities).

The following will do a simple "probability to exceed" type test to confirm that the observed data are not in the tails of the predicted distribution. (It is likely not robust to pathological gridding choices, to do eyeball the plots above instead of relying on this.)

pte_c = np.where(Nc_pos_values>data['Nc_pos'], 0, Nc_pos_pppmf).sum() / Nc_pos_pppmf.sum()

assert pte_c > 0.05 and pte_c < 0.95

pte_t = np.where(Nt_pos_values>data['Nt_pos'], 0, Nt_pos_pppmf).sum() / Nt_pos_pppmf.sum()

assert pte_t > 0.05 and pte_t < 0.95

Solution with maximum likelihood (on a grid)¶

At the end of this notebook, we'll show what a non-grid-based Bayesian analysis of this problem might look like. Before that, let's take this opportunity to practice implementing a frequentist maximum likelihood analysis.

Just like Bayes on a grid, maximum likelihood on a grid will suffer from the limited resolution with which we are mapping out the parameter space. Also similarly, the grid approach is not the only one possible. In the case of maximum likelihood, the alternative would involve doing a large number of numerical minimizations to map out the various profile likelihoods (see the Frequentism notes). We haven't tried that for this problem, so it may or may not be more efficient than the grid.

In any case, our first task is to... run another grid, this time evaluating only the log-likelihood.

def run_like_grid(Pexp_values, Nt_exp_values, Pvac_values):

res = np.empty((len(Pexp_values), len(Nt_exp_values), len(Pvac_values)))

for i,Pexp in enumerate(Pexp_values):

for j,Nt_exp in enumerate(Nt_exp_values):

for k,Pvac in enumerate(Pvac_values):

res[i,j,k] = log_likelihood(data, Pexp=Pexp, Nt_exp=Nt_exp, Pvac=Pvac)

return res

%%time

lnlike_grid = run_like_grid(Pexp_values, Nt_exp_values, Pvac_values)

Next, compute the 1D profile likelihoods we'll need to find the 1D confidence intervals for each parameter. The function prototypes below are intentionally similar to those we used in the previous section.

Ultimately what we need for the CIs is not the profile likelihood itself, but $\Delta S=S-S_\mathrm{min}$, where $S=-2\log(\mathrm{profile~likelihood})$, so let's skip directly to storing that.

# This function is somewhat analogous to get_marg1d. Instead of finding each marginalized 1D posterior, you will compute the

# the array of S-Smin corresponding to the profile likelihood for each parameter.

def get_deltaS1d(lnlike_grid, Pexp_values, Nt_exp_values, Pvac_values):

# return [{'x':Pexp_values, 'dS':...}, ...]

# YOUR CODE HERE

raise NotImplementedError()

dS1d = get_deltaS1d(lnlike_grid, Pexp_values, Nt_exp_values, Pvac_values)

Analogously to the Bayesian analysis, we'll find 68.3% and 95.4% CIs. Remind us of the values of $\Delta S$ that define these in the maximum likelihood framework.

# dS1d_thresholds = [..., ...]

# YOUR CODE HERE

raise NotImplementedError()

Let's see where these thresholds fall in the arrays of $\Delta S$. We should be containing all the CIs within the existing grid, unless the prior was for some reason very influential, shifting the posterior with respect to the likelihood.

def plot_ml_1d(dS1d, symb='o'):

plt.rcParams['figure.figsize'] = (20.0, 4.0)

fig, ax = plt.subplots(1,len(param_names))

for i,name in enumerate(param_names):

ax[i].plot(dS1d[i]['x'], dS1d[i]['dS'], symb);

ax[i].set_xlabel(name);

ax[i].set_ylabel(r'$S - S_\mathrm{min}$');

ax[i].axhline(dS1d_thresholds[0], color='C1');

ax[i].axhline(dS1d_thresholds[1], color='C1');

plot_ml_1d(dS1d);

Write a quick function to extract the maximum likelihood estimate and 1D CIs for each parameter.

# This function should find the maximum-likelihood estimate and 68.3% and 95.4% confidence intervals for each parameter.

# For uniformity/convenience, let's store these in a similar format to 'CIs', which was created for the Bayesian analysis.

# That is, as a list of dictionaries containing:

# 'estimate': the maximum likelihood estimate

# 'min': list containing the lower bound of the 2 confidence intervals specified above

# 'max': list containing the upper bound of the 2 confidence intervals specified above

# The interval bounds won't fall exactly on a grid point, of course, so for concreteness and simplicity let's define the intervals as the range of

# grid points satisfying S-Smin <= threshold, rather than trying to be more precise.

def get_ml_ci1d(dS1d):

# return [{'estimate':..., 'min':[..., ...], 'max':[..., ...]}, ...]

# YOUR CODE HERE

raise NotImplementedError()

Let's see what they are:

CIs_ml = get_ml_ci1d(dS1d)

CIs_ml

Similarly, complete the function below to calculate the 2D $\Delta S$ grids (as a proxy for profile likelihoods).

# Again, similarly, to the Bayesian case, this function should return the 2D profile likelihood corresponding to each pair of

# parameters (order: Pexp-Nt_exp, Pexp-Pvac, Nt_exp-Pvac).

# Just like above, encode it as S-Smin (under the dictionary key 'dS') rather than the profile likelihood itself.

def get_deltaS2d(lnlike_grid, Pexp_values, Nt_exp_values, Pvac_values):

#return [{'x':Pexp_values, 'y':Nt_exp_values, 'dS':..., 'names':[param_names[0], param_names[1]]}, ...]

# YOUR CODE HERE

raise NotImplementedError()

dS2d = get_deltaS2d(lnlike_grid, Pexp_values, Nt_exp_values, Pvac_values)

Fill in the $\Delta S$ thresholds appropriate for 2D 68.3% and 95.4% confidence regions:

# dS2d_thresholds = [..., ...]

# YOUR CODE HERE

raise NotImplementedError()

Let's see what those regions look like:

def plot_ml_2d(dS2d, marg2d=None):

if marg2d is None:

ls = 'solid'

col = 'C0'

else:

ls = 'dashed'

col = 'C1'

plt.rcParams['figure.figsize'] = (20.0, 4.0)

fig, ax = plt.subplots(1,len(param_names))

for i,m in enumerate(dS2d):

if marg2d is not None:

cr.whist2d_ci(marg2d[i], plot=ax[i]);

ax[i].contour(m['x'], m['y'], m['dS'], [2.3, 6.18], colors=col, linestyles=ls);

ax[i].set_xlabel(m['names'][0]);

ax[i].set_ylabel(m['names'][1]);

plot_ml_2d(dS2d)

This last cell will overlay the frequentist confidence regions (dashed orange contours) and Bayesian credible regions (solid black). You should see good agreement (in this case) if you chose uniform priors for the Bayesian analysis; otherwise, possibly not.

plot_ml_2d(dS2d, marg2d)

We'll do a final comparison of the 1D parameter constraints at the end. But first...

Solution with monte carlo sampling¶

We've emphasized above that evaluating things on a grid is not the solution of choice in general, typically becoming unweildy any time we have more than 2 parameters to fit. It seems unreasonable to end this tutorial without at least showing you what a better solution would look like. There is no code for you to complete below, but since we'll be demonstrating two methods that you'll be implementing/using later, it's worth following along.

Both of these methods are flavors of Markov Chain Monte Carlo (MCMC), which you'll read about soon. In brief, they act as special random number generators to produce sets of parameter values sampled from the posterior distribution. However, the ways they go about accomplishing this differ.

Naturally, there are no finite-resolution affects from the imposition of a grid when using these methods. There are still potentially resolution affects from making histograms of samples (see the Credible Regions tutorial) as well as from the finite number of samples. In principle, both of these can be mitigated by simple generating more samples, with the advantage that these methods by design explore the part of the parameter space where the posterior is large, with minimal human guidance.

Conjugate Gibbs sampling¶

In Gibbs sampling, only 1 parameter is changed at a time, so in subsequent samples all but 1 of the parameters will be identical. The advantage to this is that, for some data/models, these single-parameter updates are fully analytic, much like the simpler problems we worked so far had fully analytic expressions for the posterior distribution. Here the full-dimensional posterior does not have an analytic solution, but conjugacies do allow us to design an efficient monte carlo sampler. (More in the Monte Carlo Sampling notes later...)

Given that this approach is only efficient for a subset of problems anyway, there exists specialized software that allows the user to define a model in pseudocode instead of, e.g., writing a log-posterior function. This is convenient, as it reduces the potential for coding errors. Less conveniently, the industry standard (called JAGS) is not available as pure Python, so its installation and use is more complex than we'd like for this class. Instead, we will use a much stupider package called BCGS, which you should absolutely not rely on in general. But it works for this case, and will give you a sense of things.

If you stare at the generative model for long enough (perhaps coming back after reading the Monte Carlo Sampling notes), you will find that the fully conditional posteriors for $P_\mathrm{exp}$ and $Q_\mathrm{vac}=1-P_\mathrm{vac}$ can be written down using conjugacy. The distribution for $N_\mathrm{t,exp}$ cannot, however. JAGS would recognize this and fall back on a more general sampling method, but BCGS is, as mentioned above, stupid. We therefore must provide the class below, which has a function to find the conditional distribution of $N_\mathrm{t,exp}$ and update it by brute force.

class Ntexp_type(bcgs.Binomial):

def __init__(self, *args, **kwargs):

bcgs.Binomial.__init__(self, *args, **kwargs)

self.update_overriden = True

# self.Qvac must be set later

def _update(self):

Ns = np.arange(self.targets[0].value, guess['Nt_exp']*3.0) # quick/dirty guess at what should be a really comfortable upper limit

lnps = st.binom.logpmf(Ns, n=self.n.value, p=self.p.value) + st.binom.logpmf(self.targets[0].value, n=Ns, p=self.Qvac.value)

ps = np.exp(lnps - lnps.max())

ps /= ps.sum()

self.value = np.random.choice(Ns, p=ps)

With that awkwardness dealt with, we define each parameter of the model, including what PDFs and priors are involved, and providing measured or guessed values as appropriate. Details of the syntax aside, the idea is that this should be simple to code up once the model itself is fully specified, as we did at the start of the notebook.

model = bcgs.Model()

model.add('alpha_e', bcgs.Constant(hyperparams['Pexp_alpha']))

model.add('beta_e', bcgs.Constant(hyperparams['Pexp_beta']))

model.add('Pexp', bcgs.Beta(alpha=model.alpha_e, beta=model.beta_e, value=guess['Pexp']))

model.add("Nc_tot", bcgs.Constant(data['Nc_tot']))

model.add("Nc_pos", bcgs.Binomial(p=model.Pexp, n=model.Nc_tot, data=data['Nc_pos']))

model.add("Nt_tot", bcgs.Constant(data['Nt_tot']))

model.add("Nt_exp", Ntexp_type(p=model.Pexp, n=model.Nt_tot, value=guess['Nt_exp']))

As alluded to above, we will actually get samples of $Q_\mathrm{vac}=1-P_\mathrm{vac}$ instead of $P_\mathrm{vac}$. A nice feature of sampling techniques is that these can be transformed from one to the other trivially, once we have our samples. We do need to make sure the prior is still what we decided on earlier, though.

model.add('alpha_v', bcgs.Constant(hyperparams['Pvac_beta'])) # intentional swap of alpha and beta, since we are sampling Qvac

model.add('beta_v', bcgs.Constant(hyperparams['Pvac_alpha'])) # cf Beta distribution definition

model.add('Qvac', bcgs.Beta(alpha=model.alpha_v, beta=model.beta_v, value=1.0-guess['Pvac']))

model.Nt_exp.Qvac = model.Qvac

model.add("Nt_pos", bcgs.Binomial(p=model.Qvac, n=model.Nt_exp, data=data['Nt_pos']))

Let's run a modestly long chain...

%time _,gchain = model.chain_dict_to_array( model.run_chain(10000) )

... and sneakily transform $Q_\mathrm{vac}$ back to $P_\mathrm{vac}$.

gchain[:,2] = 1.0 - gchain[:,2]

These plots show the values of each parameter as a function of the sample number. They may not mean much to you now, but will become familiar once we've covered MCMC diagnostics.

fig, ax = plt.subplots(gchain.shape[1], 1, figsize=(20, gchain.shape[1]*3));

cr.plot_traces(gchain, ax, labels=param_names);

For this problem, we are reasonably confident that no red flags will have appeared above. So, let's go on and find the 1D credible intervals from the samples. (The left column below will show raw histograms of samples for each parameter.)

fig, ax = plt.subplots(gchain.shape[1], 2, figsize=(9, gchain.shape[1]*3));

CIs_g = []

for i,a in enumerate(ax):

h = cr.whist(gchain[:,i], plot=a[0]); a[0].set_xlabel(param_names[i]);

CIs_g.append( cr.whist_ci(h, plot=a[1]) ); a[1].set_xlabel(param_names[i]);

CIs_g

Metropolis sampling¶

The second sampling method we will demonstrate is a more general one that doesn't rely on conjugacies existing in the model. Typically packages implementing this sampler require the user to provide a log-posterior function, although there are some (e.g. pymc) where the model can be defined in pseudocode as above. They generally also need not just initial parameter values but also a guess at the width of the posterior in each direction in parameter space (we use your guess and fractions of each guessed value below). This particular implementation will adapt its knowledge of the posterior's shape on the fly, once it gets started.

Below is some setup:

Pexp = lmc.Parameter(guess['Pexp'], 0.1*guess['Pexp'], 'Pexp')

Nt_exp = lmc.Parameter(guess['Nt_exp'], 0.1*guess['Nt_exp'], 'Nt_exp')

Pvac = lmc.Parameter(guess['Pvac'], 0.01*guess['Pvac'], 'Pvac')

def lmc_lnpost(junk=None):

return log_posterior(data, Pexp=Pexp(), Nt_exp=Nt_exp(), Pvac=Pvac(), **hyperparams)

space = lmc.ParameterSpace([Pexp, Nt_exp, Pvac], lmc_lnpost)

dict_chain = lmc.dictBackend()

v = lmc.Vehicle(space, dict_chain, updaterStep=lmc.Metropolis(proposal_length=lmc.randChiExp()))

Now we run a chain of the same length as before.

Nsteps = 10000

%time v(1,Nsteps)

This package keeps track of the log-posterior value associated with each position in parameter space (_LOGP_), in case that's ever useful.

dict_chain.keys()

Reformatting the chain into an array...

mchain = np.array([dict_chain[k] for k in dict_chain.keys()]).T

Finally, we can plot the traces of each parameter (plus _LOGP_), and compute the CIs as before.

fig, ax = plt.subplots(mchain.shape[1], 1, figsize=(20, mchain.shape[1]*3));

cr.plot_traces(mchain, ax, labels=[k for k in dict_chain.keys()]);

fig, ax = plt.subplots(len(param_names), 2, figsize=(9, len(param_names)*3));

CIs_m = []

for i,a in enumerate(ax):

h = cr.whist(mchain[:,i], plot=a[0]);

a[0].set_xlabel(param_names[i]);

CIs_m.append( cr.whist_ci(h, plot=a[1]) );

a[1].set_xlabel(param_names[i]);

You may notice qualitative differences from the Gibbs version of these plots; these reflects a trade-off between the methods that we'll cover later on.

CIs_m

Compare results¶

Having gone through all that, it makes sense to finish this notebook by comparing the "best values" and CIs of each parameter, as found by each method. Depending on details (the specific data set, grid size/extent, priors when comparing to maximum likelihood), you may see excellent or less-than-excellent agreement. In this particular case, I would trust the Gibbs sampling result most, so use that as a reference.

print("For each parameter: best value, 68.3% CI, 95.4% CI")

for i,name in enumerate(param_names):

print("\n" + name)

print('-----------')

print('Bayes grid: ', CIs[i]['mode'], np.array([CIs[i]['min'][0], CIs[i]['max'][0]]), np.array([CIs[i]['min'][1], CIs[i]['max'][1]]))

print('Gibbs sampling: ', CIs_g[i]['mode'], np.array([CIs_g[i]['min'][0], CIs_g[i]['max'][0]]), np.array([CIs_g[i]['min'][1], CIs_g[i]['max'][1]]))

print('Metropolis sampling:', CIs_m[i]['mode'], np.array([CIs_m[i]['min'][0], CIs_m[i]['max'][0]]), np.array([CIs_m[i]['min'][1], CIs_m[i]['max'][1]]))

print('Maximum likelihood: ', CIs_ml[i]['estimate'], np.array([CIs_ml[i]['min'][0], CIs_ml[i]['max'][0]]), np.array([CIs_ml[i]['min'][1], CIs_ml[i]['max'][1]]))

In fact, for a well designed grid in this problem, we'd be surprised to see differences of even 0.01 in the CI for $P_\mathrm{vac}$.

assert np.isclose(CIs[2]['min'][0], CIs_g[2]['min'][0], atol=0.01)

assert np.isclose(CIs[2]['max'][0], CIs_g[2]['max'][0], atol=0.01)

This cell will save your eyeballs some work by plotting the results together, with their order on the X axis being the same as in the list above.

plt.rcParams['figure.figsize'] = (20.0, 4.0)

fig, ax = plt.subplots(1,len(param_names))

for i,name in enumerate(param_names):

ax[i].plot(0, CIs[i]['mode'], 'o', color='C0');

ax[i].plot([0,0], [CIs[i]['min'][1], CIs[i]['max'][1]], '--', color='C0');

ax[i].plot([0,0], [CIs[i]['min'][0], CIs[i]['max'][0]], '-', color='C0');

ax[i].plot(1, CIs_g[i]['mode'], 'o', color='C1');

ax[i].plot([1,1], [CIs_g[i]['min'][1], CIs_g[i]['max'][1]], '--', color='C1');

ax[i].plot([1,1], [CIs_g[i]['min'][0], CIs_g[i]['max'][0]], '-', color='C1');

ax[i].plot(2, CIs_m[i]['mode'], 'o', color='C2');

ax[i].plot([2,2], [CIs_m[i]['min'][1], CIs_m[i]['max'][1]], '--', color='C2');

ax[i].plot([2,2], [CIs_m[i]['min'][0], CIs_m[i]['max'][0]], '-', color='C2');

ax[i].plot(3, CIs_ml[i]['estimate'], 'o', color='C3');

ax[i].plot([3,3], [CIs_ml[i]['min'][1], CIs_ml[i]['max'][1]], '--', color='C3');

ax[i].plot([3,3], [CIs_ml[i]['min'][0], CIs_ml[i]['max'][0]], '-', color='C3');

ax[i].set_ylabel(param_names[i]);

Parting thoughts¶

Returning to questions 2 and 3 posed at the beginning of the notebook, the answers are

- Yes, the information given in the reporting is enough to estimate the uncertainty in effectiveness (albeit with assumptions about the exact size of the control and treatment groups... you could free those parameters within some justifiable priors if you were so inclined, and I'm confident the answer would still be yes).

- The 95.4% constraint on $P_\mathrm{vac}$ found from the real data reported in the Times (with a uniform prior) was $P_\mathrm{vac}=0.95^{+0.03}_{-0.05}$. So, indeed, not nailed down to the percent level. On the other hand, something as low as 60% (the threshold for a vaccine to be considered "effective" enough for widespread adoption under the circumstances, if I remember) is exceptionally disfavored.

Beyond any historical interest, you have now worked through the first inference problem in this course that can't be entirely done on paper! Hopefully this has been good practice, and you now have an understanding of why the next section delves into computational methods that allow us to characterize posterior distributions without exhaustively evaluating them throughout their parameter spaces.